Between Reflection and Bias: Designing Philosophical AI for Ethical Dialogue

Project Overview

People increasingly rely on generative AI when thinking through difficult moral choices in areas like healthcare, law, and education.

Research Question

How can conversational AI help users examine their reasoning rather than simply confirm existing beliefs?

Approach

I designed six AI personas grounded in ethical traditions such as utilitarianism, Kantian ethics, Confucianism, Buddhism, and Christianity.

All personas used the same underlying model but differed in moral framing, encouraging reflection rather than direct answers.
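As a rough illustration of that setup, the sketch below holds the model fixed and varies only the persona framing. Everything in it is an assumption made for illustration: the `SHARED_MODEL` placeholder, the `Persona` class, the `build_request` helper, the framing sentences, and the sample user message are hypothetical and are not the study's actual prompts. Only the five traditions named above are included; the sixth persona is not specified here, so it is left out.

```python
from dataclasses import dataclass

# Hypothetical placeholder: the underlying model is not named in this write-up.
SHARED_MODEL = "shared-model-placeholder"

# Hypothetical instruction shared by every persona: prompt reflection, not verdicts.
REFLECTION_RULE = (
    "Do not tell the user what to do. Ask questions that help them "
    "examine their own reasoning."
)


@dataclass(frozen=True)
class Persona:
    tradition: str
    framing: str  # illustrative wording only, not the study's prompt text


PERSONAS = [
    Persona("utilitarianism", "Weigh the consequences for everyone affected."),
    Persona("Kantian ethics", "Ask whether the action could hold as a universal rule."),
    Persona("Confucianism", "Consider roles, relationships, and social harmony."),
    Persona("Buddhism", "Consider intention, compassion, and the reduction of suffering."),
    Persona("Christianity", "Consider love of neighbour, forgiveness, and conscience."),
]


def build_request(persona: Persona, user_message: str) -> dict:
    """Build a chat-style request: same model for all, persona-specific system prompt."""
    system_prompt = (
        f"You reason from the perspective of {persona.tradition}. "
        f"{persona.framing} {REFLECTION_RULE}"
    )
    return {
        "model": SHARED_MODEL,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }


# Hypothetical user message, used only to show the request shape.
requests = [build_request(p, "Should I report a colleague's mistake?") for p in PERSONAS]
```

Keeping the reflection instruction in one shared constant means the personas differ only in moral framing, which is the variable the study set out to compare.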

Study Design

- Qualitative HCI study

- 21 participants (ages 20–26)

- 12 real-world moral dilemmas across multiple domains

- Think-aloud protocol & post-session interviews

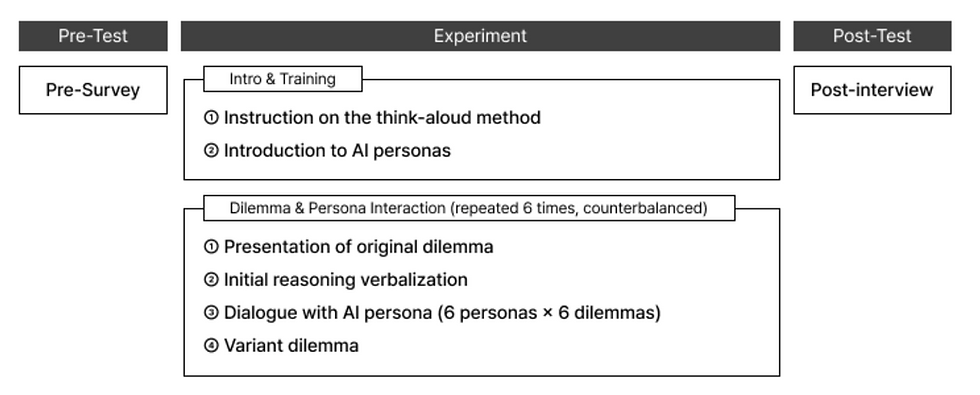

Study procedure showing pre-test, repeated dilemma–persona interactions, and post-interview.

Key Findings

1. Reflection often turned into validation

2. Trust depended on strict persona consistency

3. Culture shaped trust, while emotion shaped decisions

Design Implications

Prompting reflection takes more than presenting disagreement. Trust depends on consistent personas, and ethical reasoning is shaped by emotion, context, and cultural familiarity.

Example moral dilemma and its contextual variant used in the study.